All of today’s quantum computers are prone to errors. These errors may be due to imperfect hardware and control systems, or they may arise from the inherent fragility of the quantum bits, or qubits, used to perform quantum operations. But whatever their source, they are a real problem for anyone seeking to develop commercial applications for quantum computing. Although noisy, intermediate-scale quantum (NISQ) machines are valuable for scientific discovery, no-one has yet identified a commercial NISQ application that brings value beyond what is possible with classical hardware. Worse, there is no immediate theoretical argument that any such applications exist.

It might sound like a downbeat way of opening a scientific talk, but when Christopher Eichler made these comments at last week’s Quantum 2.0 conference in Rotterdam, the Netherlands, he was merely reflecting what has become accepted wisdom within the quantum computing community. According to this view, the only way forward is to develop fault-tolerant computers with built-in quantum error correction, using many flawed physical qubits to encode each perfect (or perfect-enough) logical qubit.

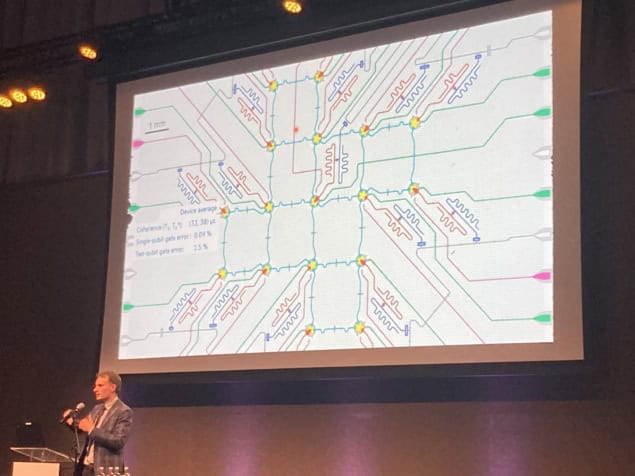

That isn’t going to be easy, acknowledged Eichler, a physicist at FAU Erlangen, Germany. “We do have to face a number of engineering challenges,” he told the audience. In his view, the requirements of a practical, error-corrected quantum computer include:

- High-fidelity gates that are fast enough to perform logical operations in a manageable amount of time

- More and better physical qubits with which to build the error-corrected logical qubits

- Fast mid-circuit measurements of “syndromes”, which are the sets of measured eigenvalues that make it possible to infer (using classical decoding algorithms) which errors have occurred while a computation is still running, rather than waiting until the end
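To make the idea of a syndrome concrete, here is a minimal sketch using the three-qubit bit-flip repetition code, the simplest textbook example of error correction. It is a classical toy model rather than anything Eichler presented: ordinary bits stand in for qubits, only bit-flip errors are modelled, and the function names (`encode`, `measure_syndrome`, `correct`) are hypothetical ones chosen for illustration.

```python
# Classical toy model of the three-qubit bit-flip repetition code.
# Assumption: bits stand in for qubits and only bit-flip errors occur.

def encode(bit):
    """Encode one logical bit into three physical bits."""
    return [bit, bit, bit]

def measure_syndrome(block):
    """Return the parities of neighbouring pairs.

    These play the role of the two parity-check (stabilizer) outcomes.
    They reveal where a flip happened without revealing the logical value.
    """
    return (block[0] ^ block[1], block[1] ^ block[2])

# Classical decoder: map each syndrome to the most likely single flipped position.
DECODER = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def correct(block):
    """Use the syndrome to undo a single bit flip."""
    flipped = DECODER[measure_syndrome(block)]
    fixed = list(block)
    if flipped is not None:
        fixed[flipped] ^= 1
    return fixed

if __name__ == "__main__":
    block = encode(1)
    block[2] ^= 1  # inject a bit flip on the third physical bit
    print("syndrome:", measure_syndrome(block))
    print("corrected block:", correct(block))
```

Note that encoding 0 or 1 gives the same syndrome for the same error, which is why syndromes can be read out mid-computation without disturbing the encoded information. Real codes, such as the surface code, use many more qubits and must also handle phase errors and faulty measurements.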

The good news, Eichler continued, is that several of today’s qubit platforms are already well on their way to meeting these requirements. Trapped ions offer high-fidelity, fault-tolerant qubit operations. Devices that use arrays of neutral atoms as qubits are easy to scale up. And qubits based on superconducting circuits are good at fast, repeatable error correction.

The bad news is that none of these qubit platforms ticks all of those boxes at once. This means that no out-and-out leader has emerged, though Eichler, whose own research focuses on superconducting qubits, naturally thinks they have the most promise.

In the final section of his talk, Eichler suggested a few ways of improving superconducting qubits. One possibility would be to discard the current most common type of superconducting qubit, which is known as a transmon, in favour of other options. Fluxonium qubits, for example, offer better gate performance, with two-qubit gate fidelities of up to 99.9% recently demonstrated. Another alternative superconducting qubit, known as a cat qubit, exhibits lifetimes of up to 10 seconds before it loses its quantum nature. However, in Eichler’s view, it’s not clear how either of these qubits might be scaled up to multi-qubit processors.

Another promising strategy (not unique to superconducting qubits) Eichler mentioned is to convert dominant types of errors into events that involve a qubit being erased instead of changing state. This type of error should be easier (though still not trivial) to detect. And many researchers are working to develop new error correction codes that operate in a more hardware-efficient way.
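A small sketch can show why an error at a known location is easier to handle than one at an unknown location. It uses a classical five-bit repetition code as a stand-in for a real quantum code, and the helper names (`decode_majority`, `decode_with_erasures`) are hypothetical. The assumption is that an erased bit is flagged by the hardware, so the decoder can simply ignore it.

```python
# Toy comparison of unknown bit flips and flagged erasures
# in a classical five-bit repetition code (an illustrative assumption,
# not a model of any specific hardware).

def decode_majority(block):
    """Decode by majority vote over all bits (error locations unknown)."""
    return int(sum(block) > len(block) / 2)

def decode_with_erasures(block, erased):
    """Decode by majority vote over the bits that were not flagged as erased."""
    kept = [b for i, b in enumerate(block) if i not in erased]
    if not kept:
        raise ValueError("every bit was erased; nothing left to decode")
    return decode_majority(kept)

if __name__ == "__main__":
    logical = 1
    clean = [logical] * 5

    # Three bit flips at unknown positions defeat the majority vote.
    flipped = list(clean)
    for i in (0, 1, 2):
        flipped[i] ^= 1
    print("3 unknown flips decode to:", decode_majority(flipped))

    # Three erasures at the same positions are flagged and can be ignored.
    erased_positions = {0, 1, 2}
    erased = [0 if i in erased_positions else b for i, b in enumerate(clean)]
    print("3 flagged erasures decode to:",
          decode_with_erasures(erased, erased_positions))
```

In this toy model the code survives up to four flagged erasures but only two unknown flips, which reflects the general rule that a code of distance d can correct d − 1 erasures but only ⌊(d − 1)/2⌋ errors at unknown locations.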

Ultimately, though, the jury is still out on how to overcome the problem of error-prone qubits. “Moving forward, one should very broadly study all these platforms,” Eichler concluded. “One can only learn from one another.”