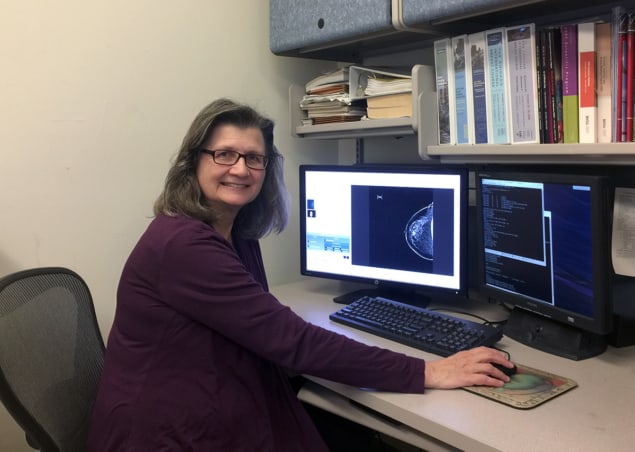

Medical physicist and entrepreneur Maryellen Giger talks to Margaret Harris about how she established the use of AI in breast cancer imaging

For more than 30 years, Maryellen Giger has worked on using artificial intelligence (AI) to improve the accuracy of medical imaging, specifically when it comes to cancer. Indeed, Giger is a pioneer in developing artificial neural networks that allow computers to identify, classify and diagnose abnormalities in medical images, be they radiographs, MRIs or CT scans. Here, she talks about her physics background, how she established the use of AI in breast cancer imaging, and how she got involved in the first companies that received approval from the Food and Drug Administration (FDA) for computer-aided cancer detection and diagnosis systems.

What sparked your initial interest in medical physics?

I was always interested in maths and physics growing up, and I majored in the subjects as an undergraduate. I went to Illinois Benedictine College, just outside Chicago. While it was a small university, it had great internship opportunities, and I spent three summers working at the Fermi National Accelerator Laboratory (Fermilab). At that time they had a neutron-therapy system, so I spent one summer working in assembler, programming some of the temperature controls within the centre. The other two summers I worked more on the hardware of beam diagnostics, and that’s how I found medical physics.

After my undergrad I was given the opportunity to go to the University of Exeter in the UK, in 1979. At Exeter, they had a project looking at electrocardiograms in relation to sudden infant death syndrome (SIDS, also known as cot death or crib death). While there, I created the electrocardiogram system, programmed it in assembler, which I luckily knew already, and wrote my MSc dissertation on the topic, looking at whether there was a type of rhythmic change among the subjects.

What did your PhD work focus on?

When I came back from the UK, I began my PhD at the University of Chicago, which covered diagnostic imaging physics, as well as therapy imaging physics. I decided I wanted to go into the diagnostic end. We learned all the physics that goes into the whole imaging process – from what kind of source would be used in a device, to types of detectors, to what happens after you get an image, and even how to present the final report to a patient – because if you don’t optimize all the steps, the process isn’t going to work. My dissertation research was evaluating the physical image quality of digital radiographs, which, interestingly, are the only type of radiograph most people are accustomed to now – but back then in the early 1980s, we started with screen films. It would take an hour to digitize a single chest radiograph – a process that is now almost instantaneous.

Following my PhD, I spent a few years as a postdoc, and then became faculty at the university’s department of radiology. I worked on analysing chest radiographs to detect lung nodules, and after that worked on screening mammograms to detect mass lesions. While a chest radiograph used to take an hour to digitize, it would take four hours to process. That eventually led to us developing computer-aided detection algorithms for medical analysis.

How did you and your colleagues pioneer the use of AI in breast-cancer imaging?

The term computer-aided diagnosis started with us in the 1980s and 1990s, and then split into computer-aided detection (CADe) and computer-aided diagnosis (CADx). Once a digital image of a mass has been captured, we need to interpret it – something that is usually done by radiologists, who are making qualitative judgements based on their experience and knowledge. A digital image contains a lot of information – for example, the size and irregularity of a tumour can be estimated by a radiologist – but an AI algorithm can calculate this quantitatively. This can help radiologists catch cancer more quickly, and help clinicians make more informed diagnoses. What we do is teach the AI how to analyse an image and what to look for.
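As an illustration of what "calculating this quantitatively" can mean, here is a minimal Python sketch. It is not the method used in Giger's lab. It assumes the lesion has already been outlined as a binary mask (1 for lesion pixels, 0 for background), and the function name `lesion_area_and_compactness`, the `pixel_size_mm` parameter and the choice of compactness as an irregularity measure are all assumptions made for this example. Counting exposed pixel edges is a crude perimeter estimate, so the numbers are only useful for comparing shapes measured the same way.

```python
import math


def lesion_area_and_compactness(mask, pixel_size_mm=1.0):
    """Return (area_mm2, compactness) for a binary mask given as a list of rows.

    compactness = 4 * pi * area / perimeter**2 (hypothetical irregularity
    measure for this sketch): lower values suggest a more irregular outline.
    """
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    pixels = 0
    exposed_edges = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c]:
                continue
            pixels += 1
            # A pixel edge is on the outline if its neighbour is background
            # or lies outside the image.
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                inside = 0 <= nr < rows and 0 <= nc < cols
                if not inside or not mask[nr][nc]:
                    exposed_edges += 1
    if pixels == 0:
        raise ValueError("mask contains no lesion pixels")
    area = pixels * pixel_size_mm ** 2
    perimeter = exposed_edges * pixel_size_mm
    compactness = 4 * math.pi * area / perimeter ** 2
    return area, compactness


# Two toy shapes: a compact square and a more irregular cross.
square = [[1] * 4 for _ in range(4)]
cross = [[0, 1, 0],
         [1, 1, 1],
         [0, 1, 0]]

print(lesion_area_and_compactness(square))  # area 16.0, compactness about 0.79
print(lesion_area_and_compactness(cross))   # area 5.0, compactness about 0.44
```

The cross scores lower than the square, which is the kind of reproducible number a radiologist could weigh alongside their own visual impression.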

To understand the difference between detection and diagnosis, you can think of the Where’s Waldo? books. Screening mammography can be thought of as being a thousand-page book, in which you have to find Waldo, who is only on five of those pages – and you have to do this in a finite amount of time. So CADe is having a computer to help you find items that have red and white stripes. Then once you find these, you have another program – CADx – that will help you determine whether those stripes are something random, like a bucket, or if they are indeed Waldo. CADx was always supposed to be used together with a radiologist, who would first look at the case and make a decision. The computer would then serve as a second reader, mostly to catch something that may have been missed.

What was the process in developing the first FDA-cleared computer-aided breast-cancer detection system?

I learnt early on that if you really want to get your product to patients, you must protect your idea by taking out a patent, and then licensing it. There’s a lot of money involved in developing any idea or product, and that’s the only way a company will invest. In 1990 we patented our CADe method and system to detect and classify abnormal areas in mammograms and chest radiographs. These were later licensed by a company called R2 Technologies (which was acquired by Hologic in 2006). By 1998 it had translated our research, and its further developments, into the first FDA-approved CADe system, called ImageChecker.

Translation of your lab’s research also led to the first FDA-cleared CADx system to aid in cancer diagnosis – tell me about this.

In breast cancer screening, if something suspicious is found in the image, the patient may undergo another mammogram, or an ultrasound or MRI scan. You end up with images from multiple modalities, and the radiologist then has to assess the likelihood that the lesion is cancer, decide whether to request a biopsy and how quickly to follow up. So we wondered how a computer could help process all that information to aid the radiologist in their decision-making process. With breast MRIs, we quantitatively extracted various image characteristics, similar to what radiologists observe, and then created, trained and validated algorithms. We performed a reader study in house and showed that radiologists performed better if they were given this aid.

We began the translation of our research development and findings in 2009, through the New Venture Challenge at the Chicago Booth School of Business. The team included two MBA students, one medical student, and a medical-physics student from my lab. Out of 111 teams, we made it to the final nine. After the challenge, we created a company, Quantitative Insights (QI), which was later incubated at the Polsky Center for Entrepreneurship and Innovation. QI conducted a clinical reader study, with multiple cases and manufacturers, which was submitted to the FDA.

In 2017 QI received clearance for QuantX, the first FDA-cleared machine-learning-driven system to aid in cancer diagnosis. The system analyses breast MRIs and offers radiologists a score related to the likelihood that a tumour is benign or malignant, using AI algorithms based on those we developed. Soon after, Paragon Biosciences – a Chicago-based life-science innovator interested in AI research – bought QuantX. In 2019 Paragon launched Qlarity Imaging, and units are now being sold and placed. I’m an adviser for the company.

How do you see AI being used in medical imaging in the future?

There are many needs in medical imaging that could benefit from AI. Some AI will contribute to creating better images for either human or computer vision, for example, in developing new tomographic reconstruction techniques. For interpretation, AI will be used to extract quantitative information from images, similar to what we did for CADe and CADx, but now also for concurrent reader systems and ultimately autonomous systems.

There are also many ancillary tasks that AI could help streamline for improved workflow efficiency, such as assessing whether an image is of sufficient quality to be interpreted, maybe even while the patient is still on the table. Then during treatment, monitoring the patient’s progress is important. Thus, using computer vision AI to extract information from, for example, an MRI could yield quantitative metrics for therapeutic response. I believe in the future, we need to watch AI grow and continue to develop AI methods for various medical tasks. Much still needs to be achieved.

What are some of the skills that you learnt during your physics degree that you use all the time? And what other skills did you have to develop on the job?

Two major skills required in my work are running a lab, and translating research. During your degree, you are not really trained on how to run and manage a lab. We have to work as a team, and around the scientific table we are all equal. You can learn this as a student by watching others, and seeing what works and what does not. You learn from your mistakes too, and now I try to tell others about them, but they all have to find their own path.

When it comes to successful research and translation, it’s really important to talk to everyone involved in a project. For us that meant understanding the aspects of the medical-imaging process, the algorithms and computation, the validation and testing, and talking to clinicians to aim for clinical impact. Thanks to being part of the Polsky Center, we received a lot of free advice as well as funding as we translated our research prototype into a commercial product. We also benefited from the Chicago Mentors group, which put us in touch with established industry people in different roles – marketing, legal, even a chief executive – and we could ask them all our questions.

What’s your advice for today’s physics students?

You have to love what you’re doing, so make sure whatever job or area of research you choose is something you really enjoy. I enjoy my job so much that it’s not just work. If I have free time in the evening, I’ll often read a scientific paper in the field or review research findings from the lab. I do work very hard, but what’s enjoyable is to sit back and bounce ideas off colleagues. My favourite time of the week is when I meet with one or two students or colleagues in the lab. We review data, and think, and question, and that’s how a new idea is formed. I enjoy teaching students in my lab and try to show them how to become independent investigators. I always tell them to look for red flags, and that it’s fine to make mistakes – we all do. I would also say that I benefited greatly from being mentored by many folks. And my most rewarding moment is when a student becomes a colleague.